Over the last year, I have had many opportunities to help my clients not only choose between MaxDiff and Conjoint but also understand why one is the better choice. That way, my clients are equipped to have a productive conversation with their own clients and to be the expert in that conversation. Several times MaxDiff has been the way to go, a couple of times a modified form of MaxDiff, and in other situations Conjoint (or Choice Modeling) has been the best option.

I feel well qualified to provide this guidance. On the Conjoint side, my PhD supervisor and mentor, Dr. Jordan Louviere, is the father of marketing-based discrete choice modeling and a true academic superstar with hundreds of publications. His guidance, combined with starting my own consulting practice during my PhD, gave me the best training and an in-depth understanding of the methods. Since then, I have published a book, written academic journal articles, and given many presentations around the world on these subjects. On the MaxDiff side, I was on the research team that originally developed and proved the approach, then called Best/Worst Scaling.

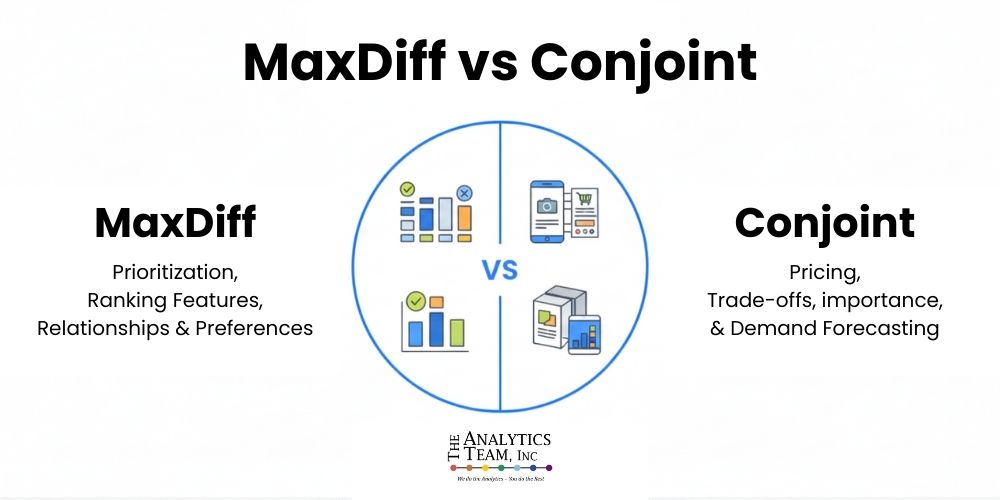

Choosing between MaxDiff and Conjoint shouldn’t feel like guesswork. Both methods are powerful, but they solve different research problems. The issue? Many teams treat MaxDiff and Conjoint as interchangeable. That leads to confusing data, unclear product priorities, and insights that don’t reflect real customer choices.

The good news: once you understand what MaxDiff and Conjoint each measure, the decision becomes simple. In this guide by The Analytics Team, you’ll get a clear, practical framework for when to use MaxDiff versus Conjoint, real examples, design best practices, and a step-by-step workflow used by top research teams.

Choosing between MaxDiff and Conjoint often comes down to what decision you actually need to support. This video explains how to make that call.

MaxDiff vs Conjoint — The Big Picture

Before diving into scenarios, let’s clarify each method’s job:

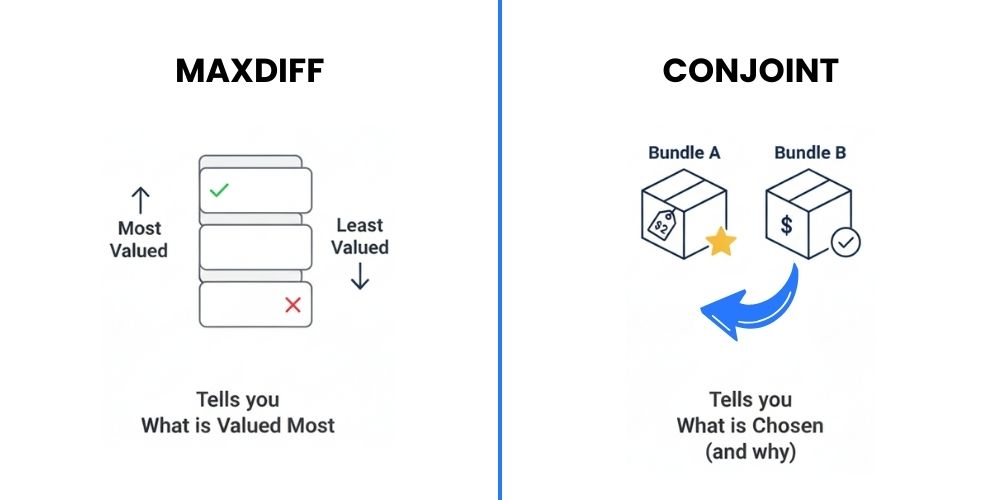

● MaxDiff = Preference Ranking

It tells you what people value most (and least) from a list.

● Conjoint = Trade-Off Simulation

It tells you how people make decisions when choosing product bundles with different attributes and prices.

Both MaxDiff and Conjoint are choice-based, but they work at different levels of complexity.

What MaxDiff Really Measures (And Why It’s So Reliable)

MaxDiff, also called Best-Worst Scaling, shows respondents a series of small sets of items and asks them, on each screen, to pick the most appealing and the least appealing item.

These repeated comparisons produce ratio-scale scores, clean rank-ordered lists, and clear preference separation between items, while avoiding rating bias.

MaxDiff in Plain English: Instead of rating items 7/10, respondents choose which item to keep or remove, which eliminates scale bias and cultural response-style issues.
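To make the mechanics concrete, here is a minimal sketch of count-based MaxDiff scoring: for each item, subtract the number of times it was picked as worst from the number of times it was picked as best, then divide by the number of times it was shown. The function name `best_worst_scores` and the item names are hypothetical. Counting is a simple approximation; commercial MaxDiff tools typically estimate utilities with multinomial logit or hierarchical Bayes models instead.

```python
from collections import Counter

def best_worst_scores(responses):
    """Count-based MaxDiff scores.

    responses: list of (items_shown, best_pick, worst_pick), one per screen.
    Returns {item: (times best - times worst) / times shown}, from -1 to +1.
    """
    best, worst, shown = Counter(), Counter(), Counter()
    for items, b, w in responses:
        shown.update(items)
        best[b] += 1
        worst[w] += 1
    return {item: (best[item] - worst[item]) / shown[item] for item in shown}

# Hypothetical screens from one respondent: (items shown, best, worst)
screens = [
    (["Price", "Speed", "Support", "Design"], "Speed", "Design"),
    (["Speed", "Security", "Design", "Battery"], "Security", "Design"),
    (["Price", "Security", "Support", "Battery"], "Security", "Support"),
]
scores = best_worst_scores(screens)
for item, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{item:10s} {score:+.2f}")
```

A score of +1 means the item was picked as best every time it appeared, and -1 means it was always picked as worst, which is already enough to produce a rank-ordered list.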

Best Use Cases for MaxDiff:

Use MaxDiff when you need to prioritize messaging elements, product features, benefits, UI/UX improvements, or early-stage scoping. MaxDiff is also great for shortlisting items before considering Conjoint (Choice Modeling or DCM) analysis.

Why MaxDiff Is So Accurate:

MaxDiff is head and shoulders above self-reported importance, or importance derived from rating scales, in predictive accuracy. Respondents make simple, fast comparisons. MaxDiff works well with large lists (20–50+ items) and produces ratio-level scores. It is also ideal for global audiences: because it avoids rating scales, we don’t need to worry about cultural differences in scale use that can make global work difficult to execute and interpret.
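The ratio-scale property comes from rescaling the estimated utilities. A minimal sketch, assuming logit-style utilities have already been estimated (the values below are made up): exponentiate each utility and normalize so the scores sum to 100. Under the logit model, the ratio of two rescaled scores equals the ratio of their choice probabilities, so an item scoring 20 is about twice as preferred as one scoring 10. Some tools use a different transform, so treat this as one common option rather than a standard.

```python
import math

def ratio_scale(utilities):
    """Rescale logit utilities so scores are positive and sum to 100.

    score_i / score_j == exp(u_i - u_j), the logit ratio of choice probabilities.
    """
    exp_u = {item: math.exp(u) for item, u in utilities.items()}
    total = sum(exp_u.values())
    return {item: 100 * v / total for item, v in exp_u.items()}

# Hypothetical zero-centered utilities for four items
utils = {"Security": 1.2, "Speed": 0.5, "Price": -0.3, "Design": -1.4}
scores = ratio_scale(utils)
for item, s in scores.items():
    print(f"{item:10s} {s:5.1f}")
```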

MaxDiff Weaknesses:

It can’t handle pricing or simulate real-world trade-offs, unlike Conjoint.

Takeaway: Use MaxDiff for prioritization and shortlisting, not for pricing or product configuration.

MaxDiff Variants

Many sites highlight Conjoint variations, but MaxDiff has important variants too. Here is The Analytics Team’s overview:

- Standard MaxDiff — Industry standard for finding the relative value of items.

- Anchored MaxDiff — Adds an “action threshold” anchor, captured through follow-up questions on each MaxDiff screen. The threshold answers the question of how good is “good enough” within the relative ranking of items. Clients often want to know which items are strong enough to trigger an action such as a purchase.

- MaxDiff with TURF — This is a very common combination and often a strongly recommended approach when using MaxDiff to choose a set of items for inclusion, promotion, or communications. It’s likely that the two most influential items in a MaxDiff test appeal to mostly the same people, so including both in a short action list is counter-productive. A TURF (Total Unduplicated Reach and Frequency) analysis that uses the transformed or probability-based MaxDiff results is the best way to maximize the impact of applying MaxDiff results. TURF is best done with Anchored MaxDiff.

- MaxDiff with Simulator (e.g., TURF Tool) — This combination extends MaxDiff to what-if simulations that test how combinations of items affect likelihood to buy, and to TURF analyses in extended scenarios that force specific items into or out of the TURF search. Best done with Anchored MaxDiff.

- Adaptive MaxDiff (Adaptive, Bandit, etc.) – When there are too many items to test for a given sample size, an adaptive approach can be best if the objective is to eliminate the worst performers and accurately rank the most important ones. In other words, you are not interested in the relative value of the bottom 50% or 70% of the items in your list.

Takeaway: Choose the MaxDiff variant that matches your objective: a full ranking, an action threshold, reach optimization, or screening a very long list.
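The anchored, TURF, and simulator variants fit together naturally. The sketch below is a minimal illustration under stated assumptions, not a production TURF tool: a respondent counts as “reached” by an item when that item’s individual-level utility is above the anchor (set to 0 here), and an exhaustive search finds the set of `k` items that reaches the most respondents, optionally forcing items in or out. The function names `reach_sets` and `turf` and all respondent data are hypothetical.

```python
from itertools import combinations

def reach_sets(respondent_utils, anchor=0.0):
    """For each respondent, the set of items whose utility beats the anchor."""
    return [
        {item for item, u in utils.items() if u > anchor}
        for utils in respondent_utils
    ]

def turf(reached, items, k, include=(), exclude=()):
    """Exhaustive TURF search for the k-item set with the highest reach.

    include: items forced into every candidate set.
    exclude: items never considered.
    Returns (sorted item list, share of respondents reached).
    """
    include = set(include)
    pool = [i for i in items if i not in include and i not in exclude]
    n_extra = k - len(include)
    if not 0 <= n_extra <= len(pool):
        raise ValueError("k is incompatible with the include/exclude lists")
    best_set, best_reach = None, -1
    for extra in combinations(pool, n_extra):
        candidate = include | set(extra)
        # Unduplicated reach: respondents reached by at least one item
        reach = sum(1 for r in reached if r & candidate)
        if reach > best_reach:
            best_set, best_reach = candidate, reach
    return sorted(best_set), best_reach / len(reached)

# Hypothetical individual-level anchored MaxDiff utilities (anchor = 0)
respondents = [
    {"A": 1.2, "B": 0.9, "C": -0.8, "D": -0.5},
    {"A": 0.7, "B": 1.0, "C": -0.3, "D": -1.1},
    {"A": 0.4, "B": 0.6, "C": -0.2, "D": -0.9},
    {"A": -0.6, "B": -0.4, "C": 1.3, "D": -0.2},
    {"A": -0.9, "B": -0.7, "C": 0.8, "D": 0.3},
]
items = ["A", "B", "C", "D"]
reached = reach_sets(respondents)

print(turf(reached, items, k=2))                 # unconstrained search
print(turf(reached, items, k=2, exclude=["A"]))  # A is unavailable
print(turf(reached, items, k=2, include=["D"]))  # D must be included
```

With this made-up data, A and B are individually the strongest items, yet together they reach only 3 of 5 respondents; pairing A with the niche item C reaches everyone. Exhaustive search grows quickly with list size, so larger TURF problems usually rely on greedy or heuristic searches.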

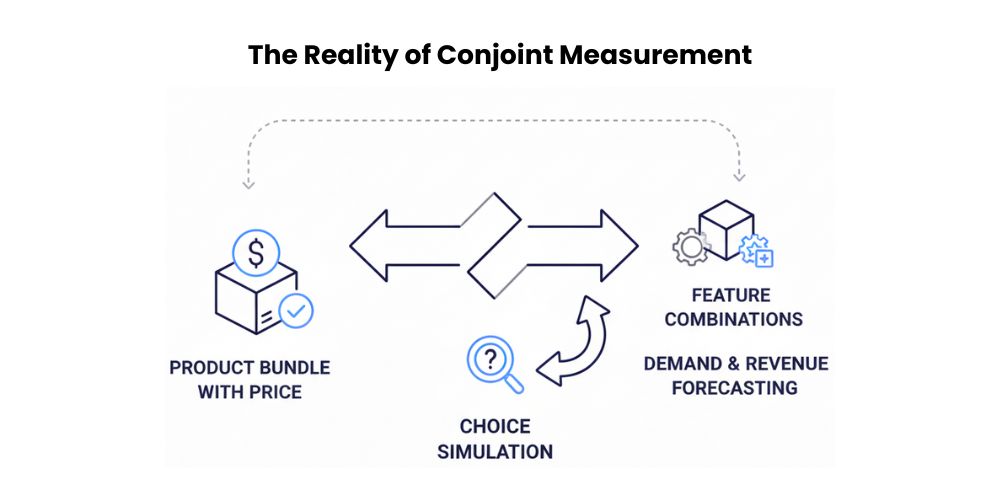

What Conjoint Really Measures (The Only Tool for Trade-Offs)

Conjoint Analysis is the gold standard for understanding real-world choices. It shows respondents side-by-side product profiles that vary in plan, price, storage, and support options, and asks them to choose the one they prefer.

What You Get From Conjoint: Attribute importance, relative impact of making changes between levels of attributes, price sensitivity, optimal bundles, market simulations, share of preference and demand/revenue/profitability projections.
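Attribute importance is conventionally computed from the range of part-worth utilities within each attribute. A minimal sketch, assuming zero-centered part-worths have already been estimated (the cloud storage attributes and values are hypothetical): each attribute’s importance is its utility range divided by the sum of all ranges. The difference between two levels of the same attribute gives the relative impact of switching between them. Importance depends on which levels the design includes, for example how wide the tested price range is.

```python
def attribute_importance(partworths):
    """Range-based importance (%) from {attribute: {level: utility}}."""
    ranges = {
        attr: max(levels.values()) - min(levels.values())
        for attr, levels in partworths.items()
    }
    total = sum(ranges.values())
    return {attr: 100 * r / total for attr, r in ranges.items()}

# Hypothetical zero-centered part-worths for a cloud storage plan
partworths = {
    "Price":   {"$5/mo": 1.0, "$10/mo": 0.2, "$15/mo": -1.2},
    "Storage": {"100 GB": -0.6, "1 TB": 0.6},
    "Support": {"Email": -0.3, "24/7 chat": 0.3},
}

importance = attribute_importance(partworths)
for attr, pct in importance.items():
    print(f"{attr:8s} {pct:5.1f}%")

# Relative impact of a level change: moving from $10/mo to $15/mo
price_change = partworths["Price"]["$15/mo"] - partworths["Price"]["$10/mo"]
print(f"$10 -> $15 changes utility by {price_change:+.1f}")
```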

Best Use Cases for Conjoint:

Use Conjoint when customers must make trade-offs, including pricing studies, product optimization, portfolio decisions, competitor comparisons, and realistic purchase simulations.

Conjoint Weaknesses:

Too many attributes cause respondent fatigue. Conjoint also requires careful design and larger samples, and it is more complex than MaxDiff.

Takeaway: Use Conjoint (Choice Modeling or DCM) when decisions involve trade-offs, especially pricing, bundling, and predicting market behavior.

MaxDiff vs Conjoint — Complete Comparison Table

| Factor | MaxDiff | Conjoint |
|---|---|---|
| Purpose | Prioritization | Trade-offs & decision modeling |
| Answers | “What matters most?” | “Which option would you choose?” |
| Output | Ranked list | Utilities + simulations |
| Complexity | Simple | Moderate-to-high |
| Pricing | Not suitable | Ideal |
| Best for | Features, messages, ideas | Configurations, prices, bundles |
| Sample size | Medium | Medium-large |
| Cognitive load | Low | Medium |

Conjoint Variants

Many sites highlight Conjoint variations. Here is The Analytics Team’s overview:

- Choice-Based Conjoint (CBC, DCM or Choice Modeling) — Industry standard simulating real decisions.

- Adaptive Choice Modeling (Adaptive Choice, ACBC) — Adapts each respondent’s tasks to focus on a smaller number of more important attributes or levels. Because it naturally concentrates on the more important ones, it may be able to handle larger numbers of attributes and levels.

- Multi-Choice, Allocation and Menu-Based Conjoint (Multi-Choice Models, MBC) — Lets respondents build custom products or choose multiple options; great for telecom, insurance, and food.

- Hybrid Choice Modeling (e.g., combining MaxDiff and Choice) — Handles more attributes than a standard choice design can manage.

Takeaway: Choose the Conjoint method that matches your product complexity and decision context.

Design Best Practices

For MaxDiff and Conjoint:

- MaxDiff: Use 12–14 screens per respondent with 4 items per set, keep item exposure balanced, and avoid repeating the same item combinations too often. Design balance across respondents is also very important.

- Conjoint: Keep attributes under 12, limit the number of levels, avoid illogical combinations of attribute levels as well as illogical or completely dominant alternatives in choice sets, pretest, and keep surveys under 20 minutes.
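A balanced MaxDiff design can be sketched in a few lines. This is a minimal illustration, not an optimized design algorithm: it assumes the number of items is a multiple of the set size, shuffles the full item list, and cuts each shuffled copy into sets, so no set repeats an item and exposure counts differ by at most one. It does not balance how often specific pairs appear together, which dedicated design software also optimizes. The function name `maxdiff_design` and its parameters are hypothetical.

```python
import random
from collections import Counter

def maxdiff_design(items, per_set=4, screens=12, seed=None):
    """One respondent's MaxDiff screens with near-equal item exposure."""
    if not items or len(items) % per_set:
        raise ValueError("this sketch needs len(items) to be a multiple of per_set")
    rng = random.Random(seed)
    design = []
    while len(design) < screens:
        order = list(items)
        rng.shuffle(order)  # each pass shows every item exactly once
        for start in range(0, len(order), per_set):
            if len(design) == screens:
                break
            design.append(order[start:start + per_set])
    return design

items = [f"Item {i}" for i in range(1, 17)]  # 16 hypothetical items
design = maxdiff_design(items, per_set=4, screens=12, seed=1)
exposure = Counter(item for screen in design for item in screen)
print(len(design), "screens; exposure counts:", sorted(set(exposure.values())))
# → 12 screens; exposure counts: [3]
```

Giving each respondent a different seed produces different design versions, which also helps spread items evenly across the sample.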

Sample Sizes: MaxDiff: 150–300+; Conjoint: 300–600+ (depending on attributes).
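Two quick calculations help sanity-check these ranges. The sketch below assumes a commonly cited rule of thumb for choice-based conjoint attributed to Johnson and Orme (respondents × tasks × alternatives per task ÷ largest number of levels of any attribute ≥ 500), and computes the average number of times each MaxDiff item is shown per respondent. Both are floors for estimating main effects; the larger ranges above also leave room for segmentation and subgroup analysis. The function names and study settings are hypothetical.

```python
import math

def cbc_min_sample(tasks, alts_per_task, max_levels, target=500):
    """Rule-of-thumb CBC minimum: n * tasks * alts / max_levels >= target."""
    return math.ceil(target * max_levels / (tasks * alts_per_task))

def maxdiff_exposures(n_items, per_set, screens):
    """Average number of times each item is shown to one respondent."""
    return screens * per_set / n_items

# Hypothetical study settings
print(cbc_min_sample(tasks=10, alts_per_task=3, max_levels=5))  # → 84
print(maxdiff_exposures(n_items=20, per_set=4, screens=14))     # → 2.8
```

Many practitioners aim for each MaxDiff item to appear about three times per respondent; if the exposure figure falls well below that, add screens or trim the list. Raising `target` gives a more conservative Conjoint floor.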

Mistakes That Lead to Bad Data (And How to Fix Them)

- Using MaxDiff for pricing — fix by using Conjoint.

- Too many Conjoint attributes — fix by shortlisting with MaxDiff first.

- Treating MaxDiff scores like purchase probabilities — remember they’re priorities, not demand drivers.

- Wrong sample sizes — small samples ruin segmentation.

- Ignoring design balance — unequal exposure skews results.

Real-World Examples

Example A: SaaS Pricing

MaxDiff ranks features; Conjoint tests bundles and pricing.

Example B: Consumer Packaged Goods

MaxDiff identifies top flavors; Conjoint models price tiers.

Example C: Financial Services

MaxDiff ranks tools; Conjoint simulates bundles and churn.

The Ideal Workflow (Used by Top Research Teams)

- Start with MaxDiff for prioritization.

- Feed results into Conjoint for pricing and optimization.

- Run scenario simulators to identify winning products and forecast revenue.
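A scenario simulator can be sketched as a logit share-of-preference calculation. A minimal sketch, assuming aggregate part-worths for a hypothetical cloud storage product, a hypothetical market of 10,000 buyers, and prices read from a separate lookup: each product’s total utility is the sum of its level part-worths, shares follow the logit rule, and revenue is share × market size × price. Real simulators usually work from individual-level utilities, include a “none” option, and need calibration before shares are read as market shares.

```python
import math

def share_of_preference(products, partworths):
    """Logit shares for competing products defined as {attribute: level}."""
    utility = {
        name: sum(partworths[attr][level] for attr, level in config.items())
        for name, config in products.items()
    }
    exp_u = {name: math.exp(u) for name, u in utility.items()}
    denom = sum(exp_u.values())
    return {name: e / denom for name, e in exp_u.items()}

partworths = {  # hypothetical zero-centered part-worths
    "Price":   {"$5": 1.0, "$10": 0.2, "$15": -1.2},
    "Storage": {"100 GB": -0.6, "1 TB": 0.6},
    "Support": {"Email": -0.3, "24/7 chat": 0.3},
}
price_usd = {"$5": 5, "$10": 10, "$15": 15}
products = {
    "Basic": {"Price": "$5", "Storage": "100 GB", "Support": "Email"},
    "Plus":  {"Price": "$10", "Storage": "1 TB", "Support": "Email"},
    "Pro":   {"Price": "$15", "Storage": "1 TB", "Support": "24/7 chat"},
}
MARKET_SIZE = 10_000  # hypothetical number of buyers per month

shares = share_of_preference(products, partworths)
for name, share in shares.items():
    revenue = share * MARKET_SIZE * price_usd[products[name]["Price"]]
    print(f"{name:6s} share {share:6.1%}  revenue ${revenue:,.0f}/mo")
```

To run a what-if, change one product’s configuration (for example, drop Pro to $10) and rerun the function; the shift in shares and revenue is the simulated impact.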

Conclusion

MaxDiff and Conjoint (Choice Models or DCM) aren’t competing methods; they are complementary. The Analytics Team recommends combining MaxDiff for prioritization with Conjoint for optimization to get the clarity, accuracy, and realistic decision modeling that no other method matches. For any questions, or to discuss how these methods can best support your business goals, schedule a free 30-minute consultation call with Dr. Chris Diener, our lead analytics expert.

Frequently Asked Questions on MaxDiff vs Conjoint

Q: What is the main difference between MaxDiff and Conjoint?

A: MaxDiff focuses on scaling individual items by preference, while Conjoint simulates trade-offs between product attribute bundles and prices.

Q: When should I use MaxDiff vs Conjoint?

A: Use MaxDiff for prioritizing features or messages; use Conjoint for pricing, bundling, and simulating real-world purchase decisions.

Q: Can MaxDiff measure price sensitivity?

A: No. Pricing is best measured by Conjoint analysis.

Q: How many items can MaxDiff handle?

A: Typically 20–50+ items. Conjoint handles fewer attributes, but it provides more detail through attribute levels and captures real-world decision trade-offs, including the interactions between attributes.

Q: Can I combine MaxDiff and Conjoint in one study?

A: Yes, many teams use MaxDiff to shortlist and then Conjoint to optimize product configurations.